Enhancing Neural Network Accuracy: Key Training Steps Explained

Learn how neural networks improve their accuracy through training. Explore the roles of data input, loss functions, and backpropagation.

Outline

- Introduction to Neural Network Training

- Data Input: The Foundation of Learning

- Understanding the Loss Function

- The Role of Backpropagation in Training

- Setting the Learning Rate

- The Importance of Iterations and Epochs

- Techniques to Prevent Overfitting: Regularization and Dropout

- Conclusion

- FAQs

Introduction to Neural Network Training

Neural networks are layered webs of simple computing units, loosely inspired by the neurons of the human brain, that process data and make predictions. Training is what makes those predictions accurate. The process involves several fundamental steps that let a model learn from data and adjust its parameters accordingly.

Data Input: The Foundation of Learning

The initial step in neural network training involves feeding the network a large and diverse dataset. This dataset should be relevant to the task the network is designed to perform. The quality and variety of the data determine how well the network can recognize patterns and capture relationships within the data, setting the stage for all subsequent learning.

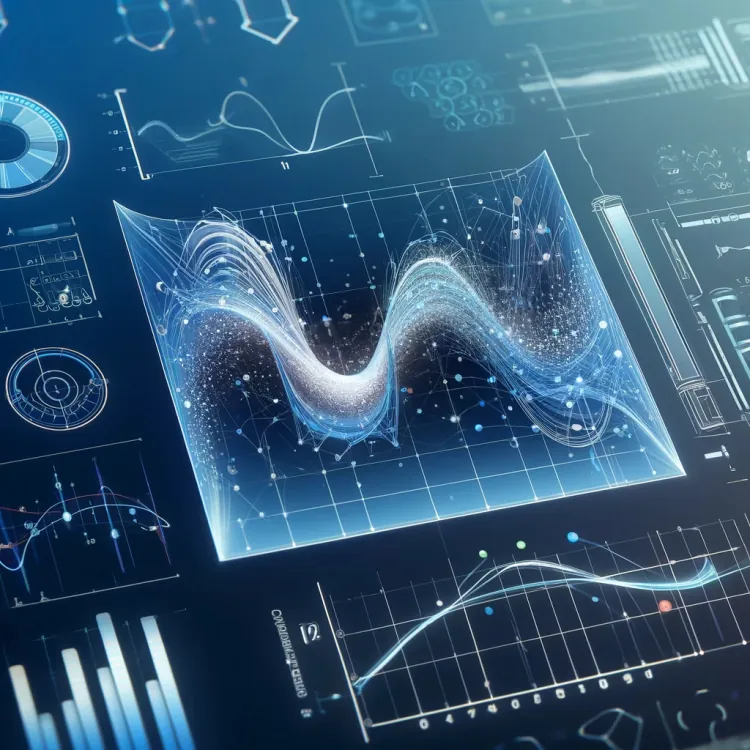

Understanding the Loss Function

At the heart of the training process is the loss function. This mathematical function quantifies the difference between the network's predicted outputs and the actual outcomes. The primary goal of training is to minimize this loss: the lower the loss, the more closely the network's predictions match the known outcomes in the data.

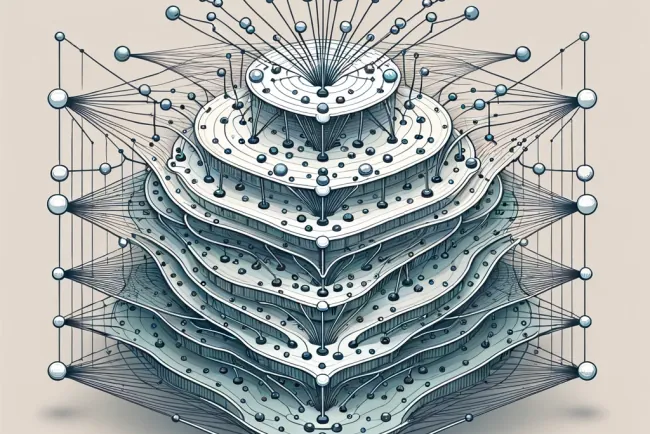
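As a minimal sketch of what "quantifying the difference" can look like, the example below uses mean squared error, one common loss function among many. The article does not say which loss it has in mind, so the choice of mean squared error, the function name, and the sample numbers are all assumptions for illustration.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    if len(predictions) != len(targets) or not targets:
        raise ValueError("predictions and targets must be non-empty and equal in length")
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical values, chosen only to contrast a good fit with a poor one.
targets = [1.0, 2.0, 3.0]
close_predictions = [1.1, 1.9, 3.2]
poor_predictions = [3.0, 0.0, 5.0]

close_loss = mean_squared_error(close_predictions, targets)
poor_loss = mean_squared_error(poor_predictions, targets)
print(f"close predictions: loss = {close_loss:.3f}")
print(f"poor predictions:  loss = {poor_loss:.3f}")
```

The predictions that sit near the targets give a loss of about 0.02, while the poor ones give 4.0. Training tries to push the network toward the first situation.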

The Role of Backpropagation in Training

Backpropagation is the mechanism that tells the network how to adjust its weights. After each forward pass, the network calculates the loss, then applies the chain rule backwards from the output layer to the input layer to work out how much each weight contributed to the error. An optimizer such as gradient descent uses these gradients to update the weights. Repeating this step is what refines the network's accuracy over time.
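The backward pass can be sketched by hand for a deliberately tiny network: one input, one hidden unit with a tanh activation, and one output. The architecture, the squared-error loss, the starting weights, and the learning rate of 0.1 are all assumptions chosen to keep the arithmetic visible; real frameworks automate this for networks of any size.

```python
import math


def forward(w1, w2, x):
    """Forward pass: input -> hidden unit (tanh) -> output."""
    h = math.tanh(w1 * x)
    y_hat = w2 * h
    return h, y_hat


def loss_and_gradients(w1, w2, x, y):
    """Squared-error loss plus its gradients, computed output-to-input."""
    h, y_hat = forward(w1, w2, x)
    loss = (y_hat - y) ** 2

    d_y_hat = 2.0 * (y_hat - y)          # dL/dy_hat
    grad_w2 = d_y_hat * h                # dL/dw2 (output-layer weight)
    d_h = d_y_hat * w2                   # dL/dh, error passed back to the hidden unit
    grad_w1 = d_h * (1.0 - h ** 2) * x   # dL/dw1, using tanh'(z) = 1 - tanh(z)^2
    return loss, grad_w1, grad_w2


# Hypothetical starting weights and a single training example.
w1, w2 = 0.5, -0.3
x, y = 1.5, 1.0
learning_rate = 0.1

initial_loss, _, _ = loss_and_gradients(w1, w2, x, y)
for _ in range(50):
    _, grad_w1, grad_w2 = loss_and_gradients(w1, w2, x, y)
    w1 -= learning_rate * grad_w1        # plain gradient descent update
    w2 -= learning_rate * grad_w2
final_loss, _, _ = loss_and_gradients(w1, w2, x, y)

print(f"loss before: {initial_loss:.4f}, loss after: {final_loss:.4f}")
```

The gradient for the hidden-layer weight reuses the error signal already computed for the output layer. That reuse is why the calculation runs from the output back towards the input.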

Setting the Learning Rate

The learning rate is a hyperparameter that controls how much the weights are adjusted at each update step. It needs to be set carefully to balance the speed of learning against the stability of the adjustments. Too high a rate can cause the network to overshoot good weights or even diverge, while too low a rate can slow down the learning process excessively.
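The trade-off is easiest to see on a toy problem. The sketch below runs gradient descent on the one-dimensional function f(w) = (w - 3)^2, whose minimum is at w = 3. The function, the starting point, the step count, and the three learning rates are all assumptions picked for illustration, not values recommended for real networks.

```python
def gradient_descent(learning_rate, steps=20, start=0.0):
    """Minimize f(w) = (w - 3)^2 with a fixed learning rate; return the final w."""
    w = start
    for _ in range(steps):
        gradient = 2.0 * (w - 3.0)   # derivative of (w - 3)^2
        w -= learning_rate * gradient
    return w


for lr in (0.001, 0.1, 1.1):
    final_w = gradient_descent(lr)
    distance = abs(final_w - 3.0)
    print(f"learning_rate={lr}: final w = {final_w:.4f}, distance from optimum = {distance:.4f}")
```

After 20 steps the smallest rate has barely moved from its starting point, the middle rate lands close to the optimum, and the largest rate overshoots on every step and ends up further away than where it began.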

The Importance of Iterations and Epochs

Training a neural network is not a one-time process but involves many iterations and epochs. An iteration is a single batch of data passed through the network, followed by one update of the weights, while an epoch is one complete pass over the entire dataset. Through these repeated cycles, the network fine-tunes its weights and biases to reduce the loss, gradually improving its predictive accuracy.
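The relationship between batches, iterations, and epochs can be sketched with a bare loop. The dataset of ten items, the batch size of four, and the helper name `iterate_minibatches` are hypothetical, and the training step itself is left as a comment.

```python
import math


def iterate_minibatches(dataset, batch_size):
    """Yield consecutive slices of the dataset; the last one may be smaller."""
    for start in range(0, len(dataset), batch_size):
        yield dataset[start:start + batch_size]


dataset = list(range(10))   # stand-in for 10 training examples
batch_size = 4
num_epochs = 3

iterations_per_epoch = math.ceil(len(dataset) / batch_size)
total_iterations = 0
for epoch in range(num_epochs):
    for batch in iterate_minibatches(dataset, batch_size):
        # A real training step (forward pass, loss, backward pass, update) would go here.
        total_iterations += 1

print(iterations_per_epoch, total_iterations)   # prints: 3 9
```

With ten examples and a batch size of four, each epoch takes three iterations (batches of 4, 4, and 2), so three epochs amount to nine weight updates.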

Techniques to Prevent Overfitting: Regularization and Dropout

Overfitting is a common challenge in training neural networks, where the model performs well on training data but poorly on unseen data. Techniques like regularization and dropout are employed to reduce it. Regularization adds a penalty on large weights to the loss, and dropout randomly ignores certain neurons during training, encouraging the network to develop redundant pathways and thus generalize better.
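Both ideas fit in a few lines. The sketch below assumes an L2 penalty (the sum of squared weights times a strength factor) as the form of regularization, and the "inverted" variant of dropout, which rescales the surviving activations so their expected value is unchanged. The article names neither variant, so both are assumptions, as are the function names and sample values.

```python
import random


def l2_penalty(weights, strength):
    """L2 regularization term: strength times the sum of squared weights."""
    return strength * sum(w * w for w in weights)


def dropout(activations, drop_probability, rng):
    """Inverted dropout: zero each activation with drop_probability, rescale the rest."""
    if not 0.0 <= drop_probability < 1.0:
        raise ValueError("drop_probability must be in [0, 1)")
    keep_probability = 1.0 - drop_probability
    result = []
    for a in activations:
        if rng.random() < keep_probability:
            result.append(a / keep_probability)   # kept and rescaled
        else:
            result.append(0.0)                    # ignored for this training step
    return result


# Hypothetical weights and data loss, for illustration only.
weights = [0.5, -2.0, 1.0]
data_loss = 0.2
total_loss = data_loss + l2_penalty(weights, strength=0.01)
print(f"total loss with penalty: {total_loss:.4f}")

rng = random.Random(0)   # seeded so repeated runs give the same mask
print(dropout([1.0] * 8, drop_probability=0.5, rng=rng))
```

The largest weight (-2.0) contributes most of the penalty, which is how regularization discourages large weights. Dropout is applied only during training; at prediction time all neurons are used.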

Conclusion

Neural networks improve their accuracy through a complex but systematic training process. By understanding and carefully implementing each step, from data input to regularization and dropout, practitioners can train networks that make accurate predictions and generalize reliably to new data.

FAQs

What is backpropagation in neural networks? Backpropagation is a method for working out how much each weight contributed to the loss. It proceeds in reverse order, from the output layer towards the input layer, so that the weights can be adjusted to reduce the error.

How does a neural network avoid overfitting? Techniques like regularization, which penalizes large weights, and dropout, which randomly deactivates neurons during training, are applied during training to reduce overfitting.

What is the significance of the learning rate in neural network training? The learning rate determines how much adjustment is made to the weights after each batch of data is processed. It balances the speed of learning against the stability of the convergence process.
