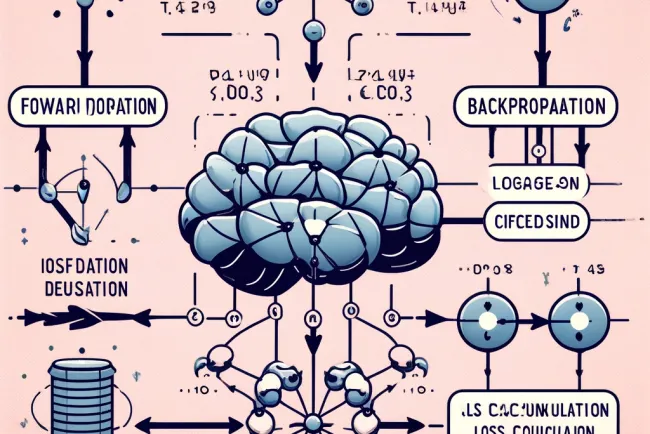

Functions of Hidden Layers in Neural Networks

The functions of hidden layers in neural networks, from feature transformation to regularization, and how they contribute to better model performance.

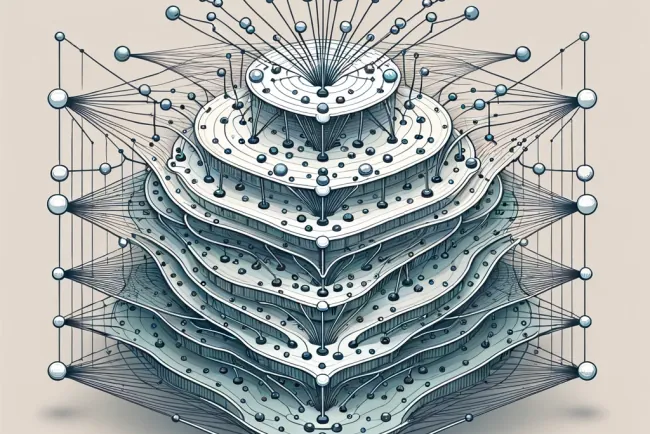

Hidden layers sit between a neural network's input and output layers and do most of the work of extracting meaningful patterns and features from the input data. Their key functions are:

1. Feature Transformation

Hidden layers transform the input features into representations that are easier for the network to model. Each layer can be thought of as learning a more complex set of features built from the simpler outputs of the previous layer. For example, in image processing, early layers might detect edges and textures, while deeper layers might recognize more complex patterns such as shapes or objects.
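The layer-by-layer idea can be sketched in plain Python, with no framework assumed. Below, a tiny fully connected network with two hidden layers applies an affine map followed by ReLU at each layer, and `forward` returns the representation produced at each depth. The layer sizes, the random weights, and the helper names (`dense`, `init_layer`, `forward`) are illustrative assumptions; a trained network would learn its weights from data instead of drawing them at random.

```python
import random

def dense(x, weights, bias):
    """Affine map: out[j] = sum_i weights[j][i] * x[i] + bias[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def relu(v):
    """Element-wise ReLU non-linearity."""
    return [max(0.0, a) for a in v]

def init_layer(n_in, n_out, rng):
    # Hypothetical initialisation: small Gaussian weights, zero biases.
    weights = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias

def forward(x, layers):
    """Return the representation produced after each hidden layer."""
    representations = []
    h = x
    for weights, bias in layers:
        h = relu(dense(h, weights, bias))  # each layer re-describes its input
        representations.append(h)
    return representations

rng = random.Random(0)
# Hypothetical sizes: 4 input features -> 5 hidden units -> 3 hidden units.
layers = [init_layer(4, 5, rng), init_layer(5, 3, rng)]
reps = forward([0.2, -1.0, 0.5, 0.9], layers)
for depth, h in enumerate(reps, start=1):
    print(f"hidden layer {depth}: {len(h)} features -> {[round(a, 3) for a in h]}")
```

Each printed line is the same input seen through one more layer of learned (here, random) features.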

2. Non-linear Mapping

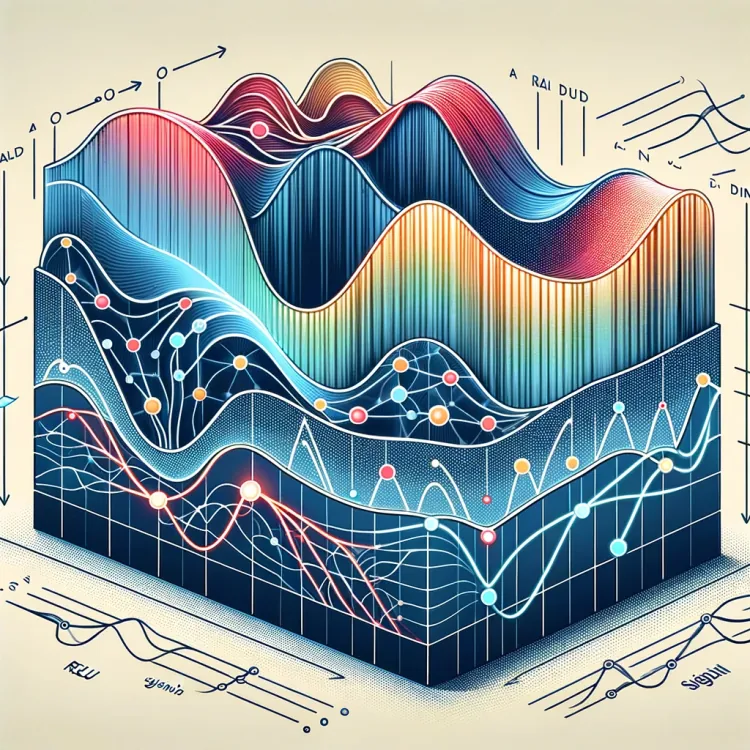

Hidden layers apply non-linear transformations to the inputs they receive. This is crucial because the relationships in most real-world data are non-linear, and a stack of purely linear layers collapses into a single linear transformation, so on its own it cannot learn such patterns. Activation functions such as ReLU (Rectified Linear Unit), sigmoid, and tanh are applied in these layers to introduce non-linearity, allowing the network to learn and model complex patterns.
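A minimal sketch, in plain Python, of why the non-linearity matters. It defines the three activation functions named above, shows algebraically that two linear layers without an activation reduce to a single linear layer, and then solves XOR with one hidden layer of two ReLU units. XOR is a classic case that no linear function of the two inputs can reproduce: fitting w1*x1 + w2*x2 + b to the four points forces b = 0 and w1 = w2 = 1, which gives 2 rather than 0 for (1, 1). The weights are chosen by hand for clarity rather than learned, and all function names are hypothetical.

```python
import math

# Common activation functions, applied element-wise in hidden layers.
def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    return math.tanh(z)

# Without an activation, two stacked linear layers are still one linear layer:
# a2 * (a1 * x + b1) + b2 == (a2 * a1) * x + (a2 * b1 + b2)
def two_linear_layers(x, a1, b1, a2, b2):
    return a2 * (a1 * x + b1) + b2

def collapsed(x, a1, b1, a2, b2):
    return (a2 * a1) * x + (a2 * b1 + b2)

# XOR with one hidden layer of two ReLU units (hand-picked weights).
def xor_net(x1, x2):
    h1 = relu(x1 + x2)         # number of inputs that are on
    h2 = relu(x1 + x2 - 1.0)   # positive only when both inputs are on
    return h1 - 2.0 * h2       # output layer: linear combination of hidden units

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_net(x1, x2))
```

The hidden unit `h2` is what a linear model lacks: it switches on only past a threshold, letting the output bend back down for (1, 1).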

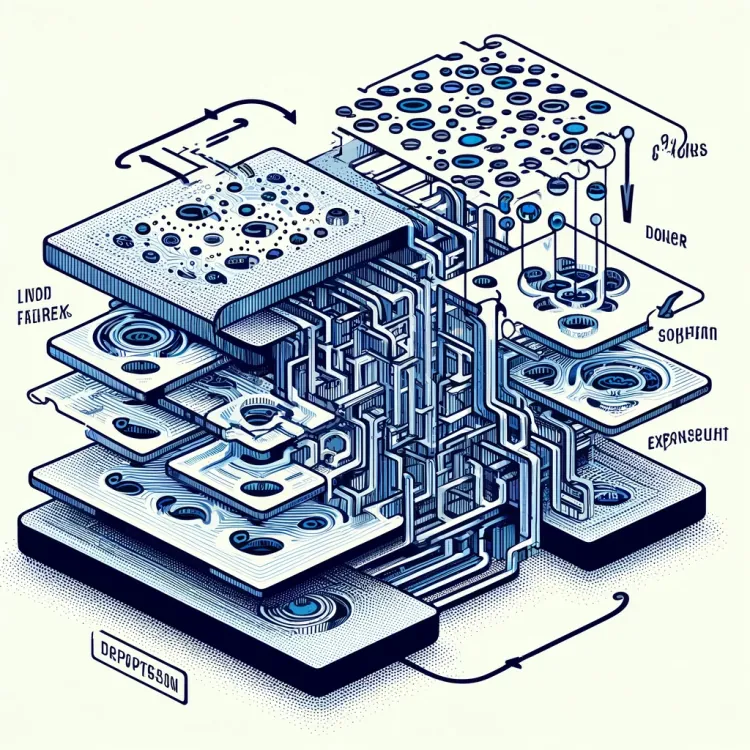

3. Dimensionality Reduction and Expansion

Hidden layers can also change the dimensionality of the data. A layer with fewer units than its input reduces the number of features, compressing the input (similar to feature selection or extraction). A layer with more units increases dimensionality to capture more detail and more interactions between features. Choosing these layer widths controls how compact or expressive each intermediate representation is, and can be tuned to suit a specific task.
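The effect of layer widths can be made concrete with a little arithmetic. This sketch lists, for a hypothetical autoencoder-style stack of dense layers (784 inputs, as from a flattened 28×28 image, compressed to a 32-unit bottleneck and expanded back), whether each layer reduces or expands dimensionality and how many weights and biases it needs. The widths and the `describe_layers` helper are assumptions for illustration; it counts only fully connected layers of the form Wx + b.

```python
def describe_layers(widths):
    """For each dense layer, return (n_in, n_out, n_params, kind)."""
    rows = []
    for n_in, n_out in zip(widths, widths[1:]):
        n_params = n_in * n_out + n_out  # weight matrix + bias vector
        if n_out < n_in:
            kind = "reduces"
        elif n_out > n_in:
            kind = "expands"
        else:
            kind = "keeps"
        rows.append((n_in, n_out, n_params, kind))
    return rows

# Hypothetical widths: compress 784 inputs to 32 units, then reconstruct.
widths = [784, 128, 32, 128, 784]
rows = describe_layers(widths)
for n_in, n_out, n_params, kind in rows:
    print(f"{n_in:>4} -> {n_out:<4} {kind:<8} {n_params:>7} parameters")
print("total parameters:", sum(r[2] for r in rows))  # 209968
```

The 32-unit bottleneck is cheap in parameters but forces the network to squeeze 784 input values into a much smaller representation before expanding it again.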

4. Hierarchy of Features

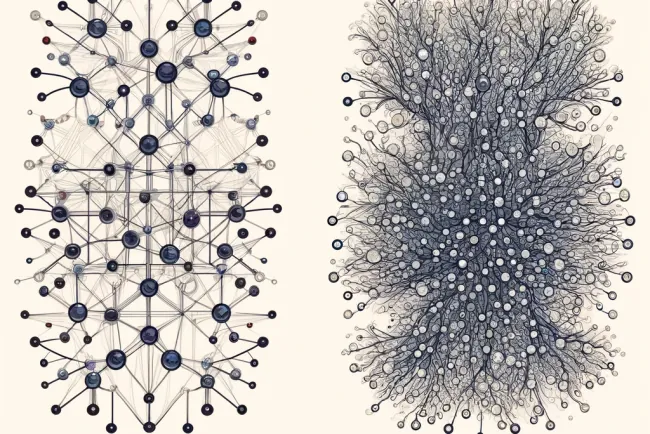

As data passes through successive hidden layers, the level of abstraction increases. In deep networks, lower layers often capture general features, while higher layers combine these features into more specific, task-relevant representations. This hierarchical learning is loosely analogous to how human cognition moves from basic perception to complex reasoning.
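In CNNs, one measurable side of this hierarchy is the receptive field: the patch of input pixels that a single hidden unit depends on. The sketch below assumes a plain stack of convolutions that all share the same square kernel size and stride (no pooling or dilation), and uses the standard recurrence in which each layer adds (kernel_size - 1) * jump pixels, where jump is the input-pixel spacing between neighbouring units. The function name and defaults are illustrative.

```python
def receptive_field(num_layers, kernel_size=3, stride=1):
    """Receptive field (input pixels along one axis) of a unit after
    `num_layers` stacked convolutions with the same kernel size and stride."""
    rf = 1    # before any layer, a unit "sees" exactly one pixel
    jump = 1  # spacing, in input pixels, between neighbouring units
    for _ in range(num_layers):
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf

for depth in range(1, 6):
    rf = receptive_field(depth)
    print(f"after {depth} conv layer(s): {rf} x {rf} input pixels")
```

With 3×3 kernels and stride 1 the field grows by two pixels per layer, so deeper units depend on progressively larger regions of the image. This is one reason they can respond to larger structures than the edges picked up by early layers.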

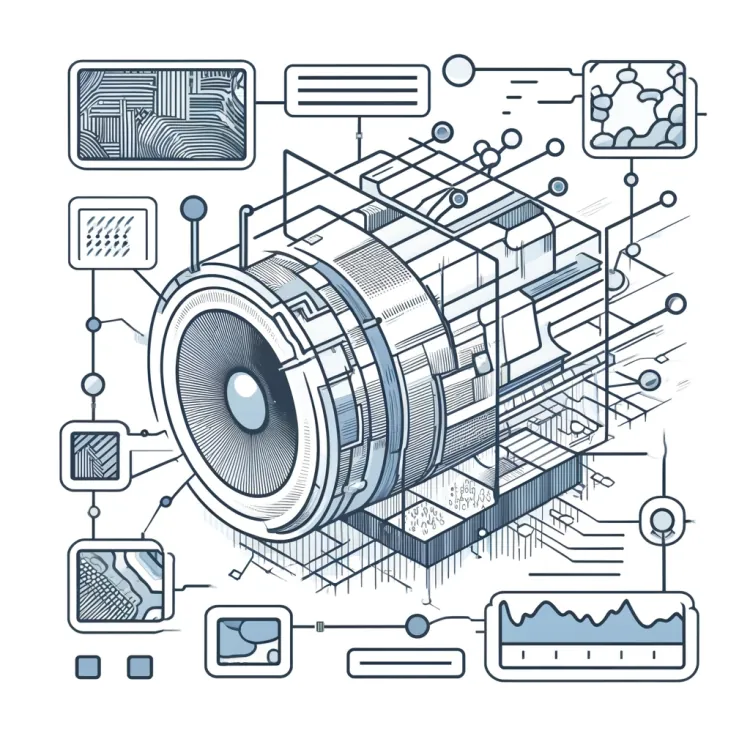

5. Spatial and Temporal Feature Learning

In networks designed for image and video processing (like Convolutional Neural Networks, CNNs) or sequential data processing (like Recurrent Neural Networks, RNNs), hidden layers are specialized to handle spatial and temporal data, respectively. CNNs use filters to capture spatial hierarchies in images, while RNNs use their internal state (memory) to process sequences of data, capturing temporal patterns.
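Both mechanisms can be sketched in a few lines of plain Python. `conv2d` slides a small filter over an image (a "valid" cross-correlation, which is the operation most deep learning libraries implement under the name convolution), and a hand-set vertical-edge filter responds only where pixel values change. `rnn` is a scalar Elman-style recurrence, h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), whose hidden state keeps carrying information after the input drops to zero. The filter, weights, and function names are assumptions chosen for illustration; real layers learn many filters and use vector-valued states.

```python
import math

def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel, take dot products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def rnn(sequence, w_x, w_h, b):
    """Scalar Elman-style RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0
    states = []
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + b)  # new state mixes input and memory
        states.append(h)
    return states

# Spatial: a vertical-edge filter fires where values change from left to right.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edge_kernel = [[-1, 1],
               [-1, 1]]
print(conv2d(image, edge_kernel))  # [[0, 2, 0], [0, 2, 0]]

# Temporal: the state stays non-zero after the single input pulse has passed.
print(rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```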

6. Regularization and Generalization

Beyond processing, hidden layers also contribute to the model's ability to generalize (perform well on unseen data) through regularization techniques applied within the layer structure, such as dropout. During training, dropout randomly turns off (zeroes the output of) a subset of neurons at each step, which prevents the network from becoming too dependent on any single neuron and promotes a more distributed representation. At inference time, dropout is disabled and all neurons are used.
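A minimal sketch of dropout applied to one layer's activations, written in plain Python. It uses the common "inverted" formulation: during training each unit is zeroed with probability `p` and the survivors are scaled by 1 / (1 - p) so the expected activation stays the same, and at inference the activations pass through unchanged. The function name and signature are hypothetical, not any particular framework's API.

```python
import random

def dropout(activations, p, training, rng):
    """Inverted dropout applied to one layer's activations."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # At inference time every neuron is active and nothing is rescaled.
        return list(activations)
    scale = 1.0 / (1.0 - p)  # keeps the expected activation unchanged
    # Each unit is dropped (set to 0) independently with probability p.
    return [a * scale if rng.random() >= p else 0.0 for a in activations]

rng = random.Random(42)
hidden = [1.0, 2.0, 3.0, 4.0]
print(dropout(hidden, p=0.5, training=True, rng=rng))   # survivors are doubled
print(dropout(hidden, p=0.5, training=False, rng=rng))  # [1.0, 2.0, 3.0, 4.0]
```

Scaling during training, rather than at inference, means the trained layer can be used as-is once dropout is switched off.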

These functions make hidden layers indispensable for complex pattern recognition and decision-making tasks, enabling neural networks to tackle a wide range of problems across many domains.

FAQs

What are hidden layers? Hidden layers are the intermediate layers in neural networks, located between the input and output layers, where most data processing occurs.

Why are non-linear activation functions important? Non-linear activation functions allow neural networks to learn complex patterns in data that linear models cannot, which is essential for modeling real-world phenomena.

How do hidden layers improve model generalization? Regularization techniques such as dropout, applied to hidden layers, reduce the network's tendency to overfit the training data, which improves its performance on new, unseen data.

Further Resources

For those interested in diving deeper into the mechanics of neural networks, a variety of resources are available, including advanced courses and comprehensive readings on deep learning.

This overview shows how hidden layers function within neural networks and why they are important in modern machine learning.
